Machine Learning Use Case

Tech: Azure, Nomad, Consul, Packer, Linux/Ubuntu, Terraform

Together with a client, we are developing a platform that ingests photographs into a Machine Learning application for analysis. One difficulty we faced was variable scalability: the workload is, by nature, going to be “bursty”.

The platform might have 100 jobs to process all at once, and then sit idle for hours at a time.

Furthermore, the job queue grows based on factors outside our control. Due to the nature of the project, it could quite literally be affected by the weather, or by spotty Internet availability at customer work sites. There is no way to predict when we’ll need more processing capacity.

The “old school” approach would be to purchase enough servers that the operating capacity at any given time can support the maximum workload. This is a difficult and very expensive solution. We can’t predict what the maximum workload might be, and the GPU servers that power Machine Learning jobs are pricey. Leaving these servers running idle when there are few or no jobs to process would cost the client tens of thousands of dollars each month!

Our platform must have flexibility and burst capacity built in to handle additional workload when it arrives, and it has to scale up and down automatically to meet the client’s needs exactly when they have them.

Azure Cloud Solution

We build this automatically scaling infrastructure with Azure Virtual Machines and a handful of enterprise-grade tools.

Our Machine Learning VMs belong to an Azure Virtual Machine Scale Set, defined with Terraform. The scale set lets us define rules for when the number of VMs should increase or decrease, based on the properties of the incoming jobs and the machine sizes. We can now proactively launch new VMs as needed and decommission them when they are not.
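As an illustration of the kind of rule a scale set might apply, here is a minimal Python sketch that turns queue depth into a target VM count. It is not our production logic: the function name, `jobs_per_vm` (a stand-in for machine size) and the `min_vms`/`max_vms` bounds are hypothetical.

```python
def desired_vm_count(queued_jobs: int, jobs_per_vm: int,
                     min_vms: int = 0, max_vms: int = 10) -> int:
    """Return how many VMs the scale set should run for the current queue.

    Hypothetical parameters: jobs_per_vm stands in for "machine size"
    (a larger GPU SKU might run more jobs at once); min_vms and max_vms
    are illustrative floor and ceiling values.
    """
    if jobs_per_vm <= 0:
        raise ValueError("jobs_per_vm must be positive")
    if queued_jobs < 0:
        raise ValueError("queued_jobs cannot be negative")
    # Integer ceiling division: a partially filled VM still counts as one VM.
    needed = (queued_jobs + jobs_per_vm - 1) // jobs_per_vm
    # Clamp to the bounds: the ceiling caps cost, the floor keeps warm capacity.
    return max(min_vms, min(max_vms, needed))
```

For example, 5 queued jobs on VMs that each take 4 jobs yields 2 VMs, while a burst of 100 jobs is capped at the ceiling of 10. The upper bound is what keeps an unpredictable burst from turning into an unpredictable bill.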

We use Packer, a HashiCorp product, to take a raw Ubuntu image, overlay our software on top of it, and package it for Azure Managed Disks. The scale set then uses this image for every new VM it launches. An additional benefit of this approach is that our Machine Learning servers always run the latest software and the latest ML models without anyone manually running server updates: we simply update the machine image, and any time a VM is spun up to handle a job, it pulls the most up-to-date image.

When a new job is submitted through our software, we dispatch it to our scheduling environment, which acts as a queuing system. The scheduler looks at the current infrastructure, determines whether a machine is available to process the job, and if so, allocates the work to that running machine. If no machine is available, it uses the scale set rules we defined to decide whether to spin up a new VM.
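The dispatch decision described above can be sketched in a few lines of Python, assuming each VM advertises a fixed number of job slots. `Node`, `Decision`, `dispatch` and the three action names are hypothetical; in our platform this logic is spread across Nomad, Consul and custom application code rather than living in one function.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    capacity: int       # jobs this VM can run at once (hypothetical)
    running: int = 0    # jobs currently allocated to it

    @property
    def free_slots(self) -> int:
        return self.capacity - self.running


@dataclass
class Decision:
    action: str                 # "allocate", "scale_up", or "wait"
    node: Optional[str] = None  # set only when action == "allocate"


def dispatch(nodes: list[Node], current_vms: int, max_vms: int) -> Decision:
    """Place one job: use a running VM if possible, else request a new one.

    current_vms should include VMs that are still booting, so a burst
    does not trigger more scale-ups than the ceiling allows.
    """
    available = [n for n in nodes if n.free_slots > 0]
    if available:
        # Prefer the running machine with the most free capacity.
        target = max(available, key=lambda n: n.free_slots)
        target.running += 1
        return Decision("allocate", target.name)
    # No free machine: ask for a new VM if the scaling rule allows it.
    if current_vms < max_vms:
        return Decision("scale_up")
    # At the ceiling: leave the job queued until capacity frees up.
    return Decision("wait")
```

Returning a decision instead of launching a VM directly keeps the placement logic testable and leaves the actual scale-out to whatever component owns the scale set.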

Eventually the system reaches a steady state in which no additional jobs are coming in and no additional machines are needed. All of this is handled in the job scheduling layer (Nomad, Consul, and our own custom application code).
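Scaling back down can be sketched the same way. The Python below assumes a hypothetical idle timeout: once a VM has gone `idle_timeout_s` seconds without work, it becomes a candidate for decommissioning, longest-idle first, without shrinking the fleet below `min_vms`. The function name, timeout and floor are illustrative, not values from our deployment.

```python
from typing import Optional


def vms_to_decommission(idle_since: dict[str, Optional[float]], now: float,
                        idle_timeout_s: float, min_vms: int = 0) -> list[str]:
    """Pick VMs that have been idle past the timeout.

    idle_since maps every VM name to the time it became idle,
    or None if it is currently running a job (hypothetical input shape).
    """
    # (idle start, name) pairs for VMs idle at least idle_timeout_s;
    # sorting puts the longest-idle machines first.
    expired = sorted(
        (since, name)
        for name, since in idle_since.items()
        if since is not None and now - since >= idle_timeout_s
    )
    # Never shrink the fleet below the floor.
    removable = max(0, len(idle_since) - min_vms)
    return [name for _, name in expired[:removable]]
```

The timeout keeps a burst that pauses briefly from tearing down machines that would be needed again a few minutes later.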

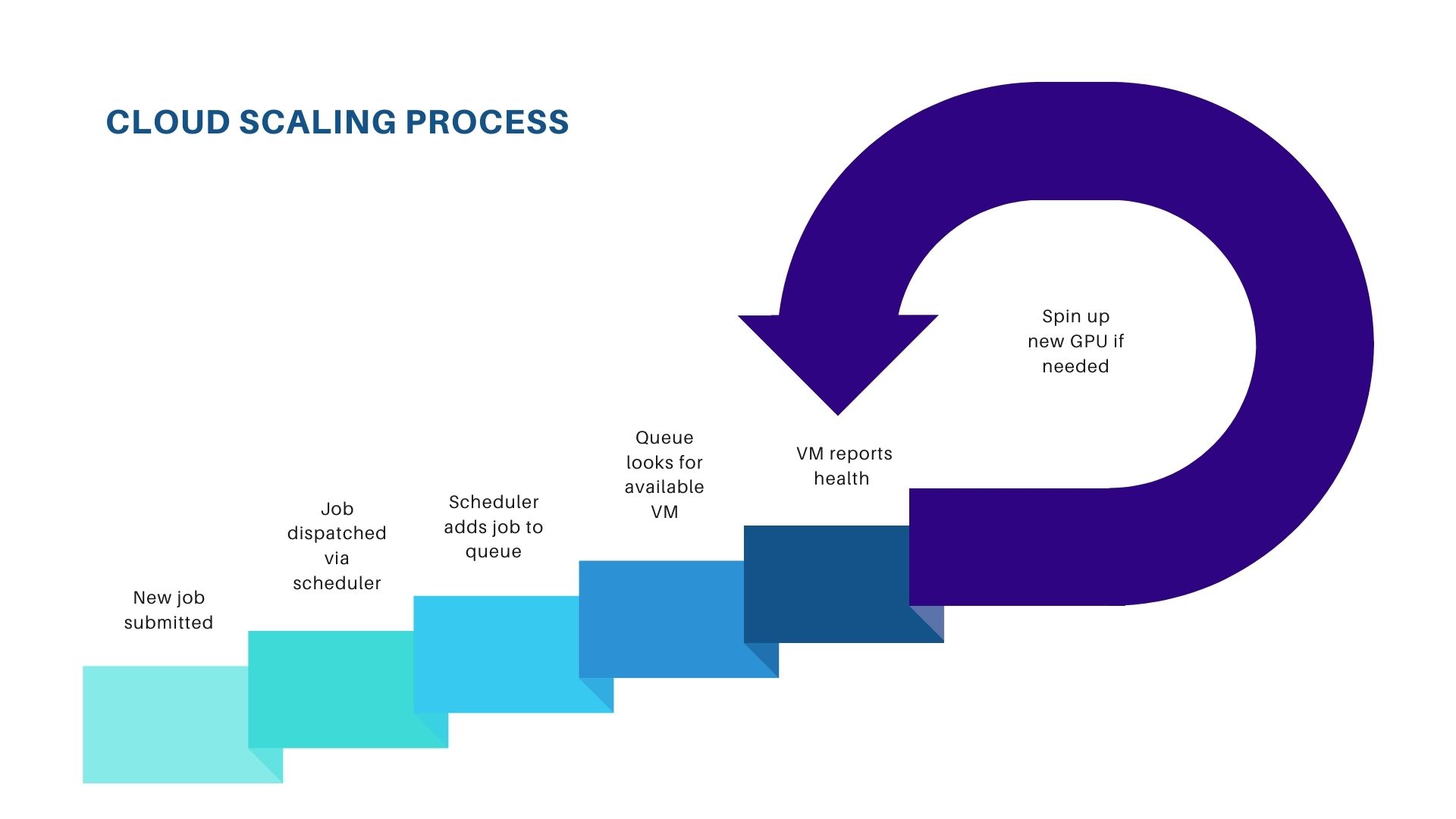

Our scaling process involves several steps:

- A new Machine Learning job is created in our application

- The software dispatches the job via our scheduling tools

- The scheduler adds it to the scheduling queue

- The scheduler checks for an available VM, spinning up a new machine if necessary

- The VMs continually report their health (including their resource usage and job status) back to the scheduler, which uses those values to scale the infrastructure up or down accordingly.
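On the scheduler side, the reporting loop in the last step could look roughly like this. This Python sketch assumes each VM periodically sends a report with a timestamp, a GPU utilization figure and its running job count (all hypothetical field names). The scheduler keeps the newest report per VM and treats any VM that has been silent longer than a TTL as unhealthy, similar in spirit to a Consul TTL health check.

```python
from dataclasses import dataclass


@dataclass
class HealthReport:
    vm: str
    timestamp: float   # when the VM sent the report (seconds)
    gpu_util: float    # 0.0 to 1.0 (hypothetical field)
    running_jobs: int


def summarize(reports: list[HealthReport], now: float, ttl_s: float) -> dict:
    """Reduce raw reports to the figures a scaling rule would consume."""
    # Keep only the newest report from each VM.
    latest: dict[str, HealthReport] = {}
    for r in reports:
        if r.vm not in latest or r.timestamp > latest[r.vm].timestamp:
            latest[r.vm] = r
    # A VM that has not reported within the TTL is treated as unhealthy.
    healthy = {vm: r for vm, r in latest.items() if now - r.timestamp <= ttl_s}
    stale = sorted(set(latest) - set(healthy))
    busy = sum(1 for r in healthy.values() if r.running_jobs > 0)
    avg_gpu = (sum(r.gpu_util for r in healthy.values()) / len(healthy)
               if healthy else 0.0)
    return {"healthy": sorted(healthy), "stale": stale,
            "busy": busy, "avg_gpu_util": avg_gpu}
```

Healthy but idle VMs are natural scale-down candidates, stale VMs can be replaced, and the utilization figure can feed whatever rule decides whether to add capacity.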

Flexible Software

The end result of this scalable infrastructure is that the client does not spend money on idle machines, yet can still handle whatever workload they throw at it at any given time. When the workload requires it, they get the machines they need; when they don’t need them, the infrastructure scales down.

If you need assistance building a flexible cloud infrastructure for your software project, please reach out; we would be happy to consult with you.

If you are thinking about building or buying software, take a look at our blog post comparing the two options: Custom Software Development Versus Commercial Off The Shelf (COTS).